Zone 1: Structure of Spatial Experience

The space that you experience around yourself always has some structure, even when you are surrounded by darkness or dense fog. The otherwise “empty” space has its up and down, left and right, back and front. And when you can see anything at all, you don’t see every part of the surroundings equally well. This unequal visibility of your environment is dynamic: it changes as you move your gaze or walk. As you move, some parts of the surroundings become more visible, while other parts fade out. Projects in this zone attempt to derive a comprehensive picture of these dynamics using the notion of the solid field of visibility.

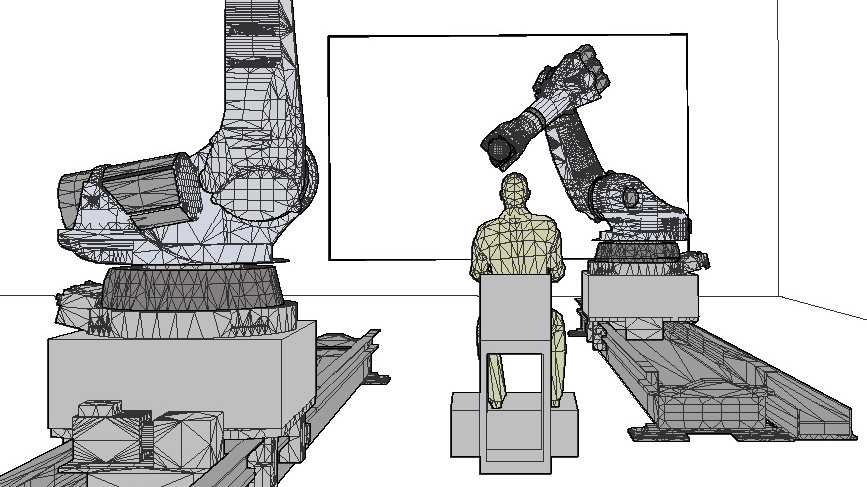

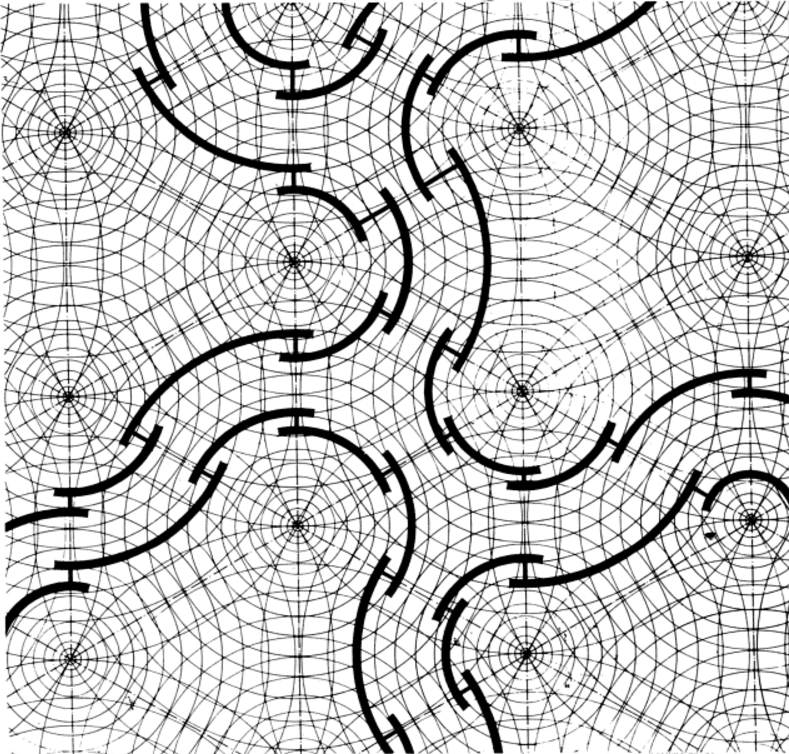

Plan view of a hypothetical “perceptual field” generated by an arrangement of curved walls. This drawing by Paolo Portoghesi is reproduced from Rudolf Arnheim’s The Dynamics of Architectural Form (1977, p. 30).

Solid Field of Visibility

Basic studies of visual perception in sensory psychophysics and systems neuroscience have led to a number of models of visibility. We use these models to predict the organization of spatial experience for an observer who may be static or moving, surrounded by objects that may move or stand still.

Briefly, an object in your environment can be seen from some locations and not from others. The totality of locations from which the object is visible is the solid region of visibility of that object. Multiple solid regions for all the objects in any given environment make up the solid field of visibility of that environment.
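
The definitions above can be illustrated with a toy computation. The following is a minimal sketch, not the models used in these projects: it assumes a flat 2D grid, treats visibility as nothing more than an unobstructed straight line of sight, and ignores viewing distance, contrast and motion. The names `line_of_sight`, `region_of_visibility` and `field_of_visibility` are hypothetical and introduced only for illustration.

```python
from typing import Dict, Set, Tuple

Cell = Tuple[int, int]


def line_of_sight(a: Cell, b: Cell, walls: Set[Cell], samples_per_cell: int = 4) -> bool:
    """Return True if no wall cell lies strictly between a and b.

    Assumption: visibility is reduced to an unobstructed straight line,
    checked by sampling points along the segment.
    """
    (x0, y0), (x1, y1) = a, b
    steps = max(abs(x1 - x0), abs(y1 - y0)) * samples_per_cell
    for i in range(1, steps):
        t = i / steps
        cell = (round(x0 + t * (x1 - x0)), round(y0 + t * (y1 - y0)))
        if cell in walls:
            return False
    return True


def region_of_visibility(obj: Cell, walls: Set[Cell], width: int, height: int) -> Set[Cell]:
    """All free locations on the grid from which the object can be seen."""
    return {
        (x, y)
        for x in range(width)
        for y in range(height)
        if (x, y) not in walls and (x, y) != obj and line_of_sight((x, y), obj, walls)
    }


def field_of_visibility(objects: Dict[str, Cell], walls: Set[Cell],
                        width: int, height: int) -> Dict[str, Set[Cell]]:
    """One region of visibility per object: a toy 'field of visibility'."""
    return {name: region_of_visibility(pos, walls, width, height)
            for name, pos in objects.items()}


# A short vertical wall hides the object from locations directly behind it.
walls = {(2, 1), (2, 2), (2, 3)}
field = field_of_visibility({"statue": (4, 2)}, walls, width=5, height=5)
```

In this toy world, the location `(3, 2)` belongs to the region of visibility of the statue, while `(0, 2)` does not, because the wall stands between them.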

Whoever knows the solid field of visibility of a world (physical, virtual or mixed) has a complete description of the boundaries of visual experience in that world. What is more, knowing the solid field of visibility of a virtual or mixed world makes it possible to adjust the field and thus control the flow of narrative or the engagement of the user.

Approach. This work has two parts, predictive and empirical. First, we derive predictions of the solid field of visibility from models of visibility that basic vision science has built from measurements on 2D screens. Then we test these predictions in studies of different 3D spaces: physical, virtual and mixed, as described below.

Human side of the light field. Notably, the solid field of visibility can be thought of as a sensory equivalent of the light field: a radiometric quantity that fully characterizes the optical properties of any environment. The light field of an environment describes the amount of light flowing in every direction through every point in space. The solid field of visibility of an environment describes what parts of the environment are visible from every point in space, in every viewing direction. In other words, the field of visibility tells us which aspects of the light field are usable by the human observer.
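
The dependence on both position and viewing direction can be sketched in a few lines. This is an illustrative simplification under stated assumptions: the world is 2D, an object counts as visible whenever it falls within a fixed angular window around the gaze direction, and occlusion and visual sensitivity are ignored. The function `visible_objects` and the default `half_fov_deg=60.0` are hypothetical placeholders, not values taken from these studies.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def visible_objects(position: Point, gaze_deg: float,
                    objects: Dict[str, Point],
                    half_fov_deg: float = 60.0) -> List[str]:
    """Names of objects inside the angular window around the gaze direction.

    Assumptions: 2D world, no occlusion, and a placeholder half field of
    view; a real model would also weigh distance, contrast and motion.
    """
    px, py = position
    seen = []
    for name, (ox, oy) in objects.items():
        # Direction from the observer to the object, in degrees.
        bearing = math.degrees(math.atan2(oy - py, ox - px))
        # Signed angular offset from the gaze direction, wrapped to [-180, 180).
        offset = (bearing - gaze_deg + 180.0) % 360.0 - 180.0
        if abs(offset) <= half_fov_deg:
            seen.append(name)
    return sorted(seen)


scene = {"door": (5.0, 0.0), "window": (0.0, 5.0), "lamp": (-5.0, 0.0)}
looking_east = visible_objects((0.0, 0.0), 0.0, scene)
looking_north = visible_objects((0.0, 0.0), 90.0, scene)
```

Evaluating such a function at every position and gaze direction yields a crude stand-in for the field of visibility: the same scene and the same light field, but a different visible subset for each viewpoint.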

Layer 1: Physical Spaces

Proof of concept

Our first proof of concept was established in a large physical space using large-scale robotics. It was supported by the inaugural Harold Hay award from the Academy of Neuroscience for Architecture. This project is a collaboration between several disciplines:

- psychophysics and neuroscience, supervised by Sergei Gepshtein at the Salk Institute for Biological Studies,

- production design and world building, supervised by Alex McDowell at USC World Building Media Lab,

- architectural and urban design, supervised by Greg Lynn at UCLA Department of Architecture and SUPRASTUDIO.

Using the computational and experimental tools developed at the Salk Institute, the research team conducted a series of studies at USC and UCLA. The goal was to reveal the visual organization of the spatial experience generated by large built environments.

The study followed the format of a psychophysical experiment. Participants viewed static and dynamic visual patterns displayed on large physical surfaces propelled through space by industrial robots. We measured spatial and temporal characteristics of visual perception with a variety of visual tasks (detection, discrimination and direct reports), using patterns rendered on a screen that was either static or moving toward and away from the observer. In addition to viewing distance, we varied the rate of movement within the pattern and the speed of movement of the screen.
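
One reason viewing distance matters in such measurements is purely geometric: the same physical pattern subtends a smaller visual angle, and so has a higher spatial frequency at the eye, when it is farther away. The sketch below shows only this standard geometry; the function names and the numbers in the usage lines are hypothetical and are not parameters of the study.

```python
import math


def visual_angle_deg(size_m: float, distance_m: float) -> float:
    """Visual angle subtended by an object of a given size, viewed head-on."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))


def cycles_per_degree(cycles_per_m: float, distance_m: float) -> float:
    """Spatial frequency at the eye for a pattern near the line of sight.

    One degree of visual angle spans 2 * d * tan(0.5 deg) meters on a
    surface at distance d, so the frequency grows linearly with distance.
    """
    meters_per_degree = 2.0 * distance_m * math.tan(math.radians(0.5))
    return cycles_per_m * meters_per_degree


# Hypothetical example: a grating with 10 cycles per meter seen from 2 m and 4 m.
near = cycles_per_degree(10.0, 2.0)
far = cycles_per_degree(10.0, 4.0)
```

Doubling the viewing distance doubles the spatial frequency at the eye, which is why a pattern that is clearly visible up close can fall outside the visible range from afar.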

This work set a precedent for mapping spatial and temporal boundaries of perception in large spaces, and it was a step toward the numerous forthcoming studies in architectural and urban design, in virtual architecture, mixed reality, and other immersive media.

Layer 2: Virtual and Mixed Spaces

As in the study of physical space described above, we study the limits of experience in virtual and mixed environments in two important special cases. The first case is a fully synthetic environment rendered by means of a head-mounted display (HMD). The second case is a physical environment reproduced in an HMD by means of head-mounted world cameras and enriched with digital content.

Layer 3: Modulations and Generalizations

In Layers 1-2 of this Zone, we discover the envelope of possibility for visual experience: the boundaries between visible and invisible aspects of any environment. In everyday perception, these boundaries are modulated by the person’s attention, expectations, prior experience, and expertise.

The subsequent cluster of projects in this Zone will address such modulations of the envelope of experience, separately in physical and mixed environments. Our goal will then be to generalize this approach to other visual modalities, such as stereoscopic vision, and to study the many counterparts of the concept of the solid field in other senses, starting with audition.